For those curious about what #TakviyeliÖğrenme ( #ReinforcementLearning ) looks like 😉

Credit: Kalilur Rahman

Humanoid robot robustness experiment

Trying to make it walk with a broken foot…

lnkd.in/dFtMZX7V

#bipedal #humanoid #robot #locomotion #robustness #ImitationLearning #ReinforcementLearning #Adam #PNDbotics #模倣学習 #強化学習

Even as we keep moving the object, our trained robot arm finds it and picks it up autonomously #robot #robots #robotics #robotarm #reinforcementlearning #deeplearning #machinelearning #AI #artificialintelligence #homerobot #everydayrobot

I was asked about how we intend to use AI / Machine Learning in the game. 🤖🤔

Here's a video of the training environment and a summary of what we are building.

🧵1/6 👇

#machinelearning #ai #reinforcementlearning #madewithunity #indiegame #gamedevelopment

While I crunch numbers, my AI sidekick maps its surroundings with sophisticated sensors and algorithms 👌

#MachineLearning #ArtificialIntelligence #ReinforcementLearning #DeepLearning

The unveiling of Blackwell, the most powerful NVIDIA GPU created to date.

#IA #AI #GPUs #NVIDIA #Tecnología #ReinforcementLearning #AI #BigGPU #Technology

🤖 Reinforcement learning will be used: AIs will work with other AIs, training each other, and this will increase the size of the models and the data. To support this, a very large NVIDIA GPU is being introduced. 🧠

#AI #IA #GPUs #NVIDIA #Tecnología #ReinforcementLearning #AI #BigGPUs #Technology

Distinction Between Data Science, AI, and Machine Learning: Revealed bit.ly/3y3rzCk #DataScience #MachineLearning #ArtificialIntelligence #Healthcare #Reinforcementlearning #SmartSystems

'Mastering Financial Trading — A Deep Dive into #AI , Investment, Robot Trading, and Arbitrage Strategies': amzn.to/42X1Erv by Ali Sermet Tasdogen

—————

#AlgorithmicTrading #DataScience #MachineLearning #ReinforcementLearning #Fintech #Crypto

Had a great time at the Reinforcement Learning Summer School at UPF Barcelona this year with my fellow researchers 😎 #RLSS #reinforcementlearning #deeplearning

Bipedal walking robot test

Based on real-time terrain perception, it climbs and descends stairs one stair per stride

youtu.be/V98ru3ILkwo

#bipedal #humanoid #robot #robust #locomotion #bipedal walking #ReinforcementLearning #LimXDynamics #人型ロボット

🚀 Exciting news! Our latest research explores deep reinforcement learning for modelling protein complexes, proposing the innovative GAPN framework to tackle complex challenges. Read more at: bit.ly/3WqI4T9 #ProteinModelling #ReinforcementLearning

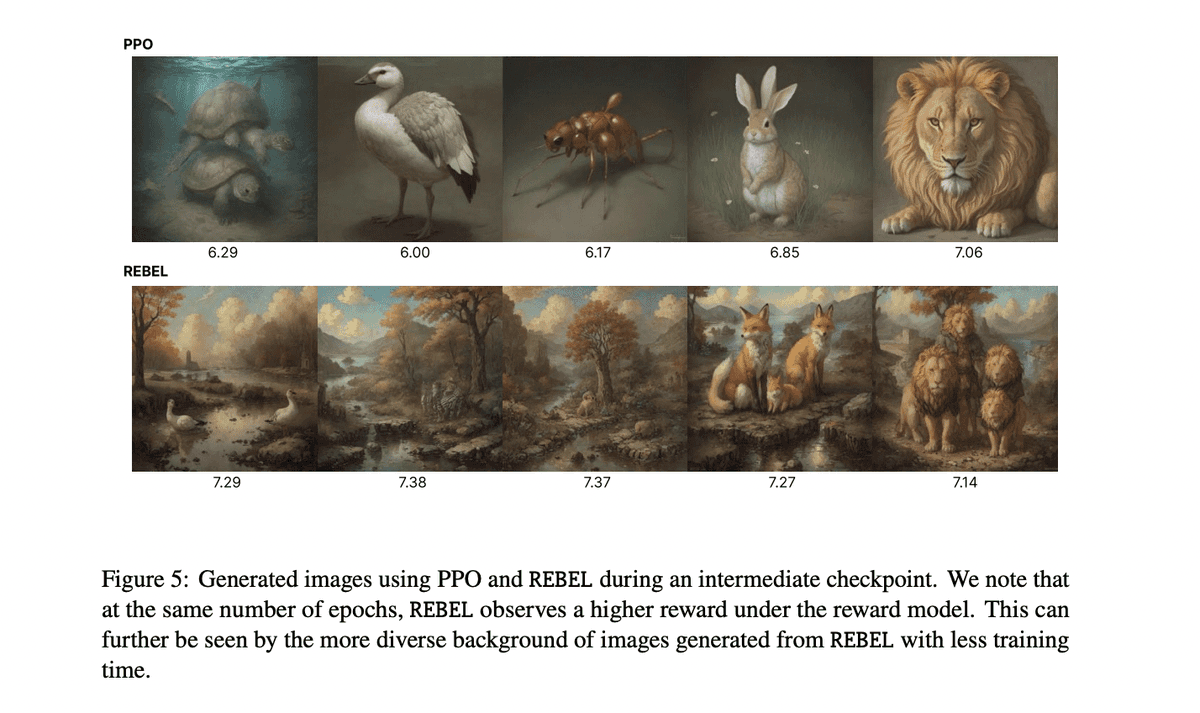

REBEL: An Innovative Approach to Reinforcement Learning in Modern Applications

#AI #algorithm #artificialintelligence #CarnegieMellonUniversity #Cornell #DPO #GPT4evaluation #llm #machinelearning #PPO #Princeton #Rebel #ReinforcementLearning #RMscore

multiplatform.ai/rebel-a-innova…

Check out our #ICRA2024 paper 'Actor-Critic Model Predictive Control.' Model-free #reinforcementlearning (RL) is known for its strong task performance and flexibility in optimizing general reward formulations. On the other hand, #ModelPredictiveControl (MPC) benefits from…

An AI-powered humanoid robot smoothly handles complex tasks

With this level of dexterity, it could make a great helper in the kitchen

lnkd.in/gzy8rGSH

#humanoid #robot #ImitationLearning #AI #ReinforcementLearning #Astribot_Inc #Astribot_S1 #模倣学習 #強化学習

#RL4AA24 has been a great experience!

I was able to talk about my research at IFMIF-DONES and learn more about autonomous accelerators.

Congratulations to the organisers and long live #ReinforcementLearning! 🤖❤️

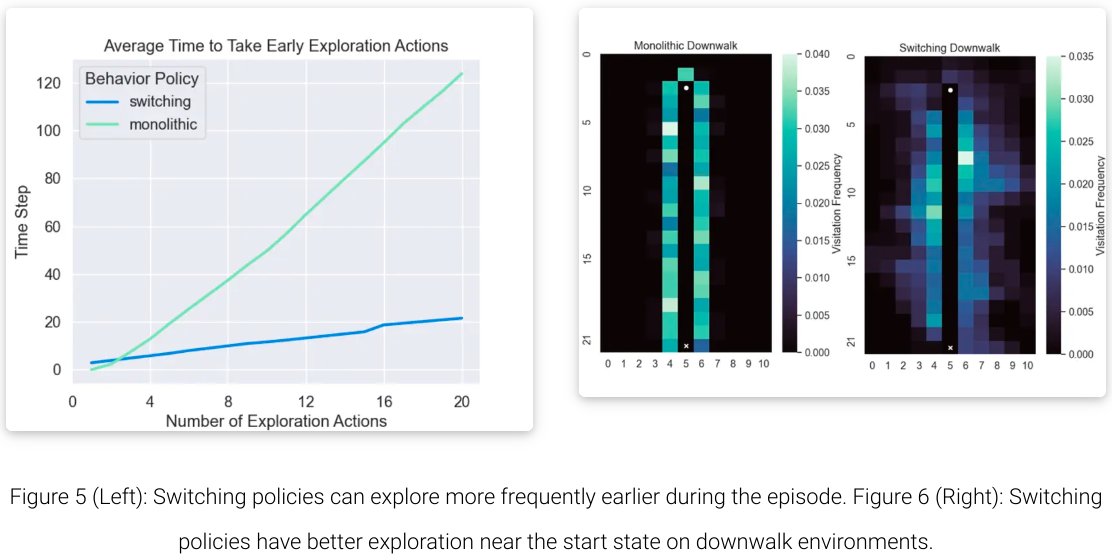

Mode-switching policies offer amazing flexibility in #ReinforcementLearning! 🤖 Loren Anderson's #ICLR2024 blog post provides a great analysis, building on our 'When should agents explore?' paper. See what makes these policies so adaptable:

iclr-blogposts.github.io/2024/blog/mode…

Thank you, Loren!

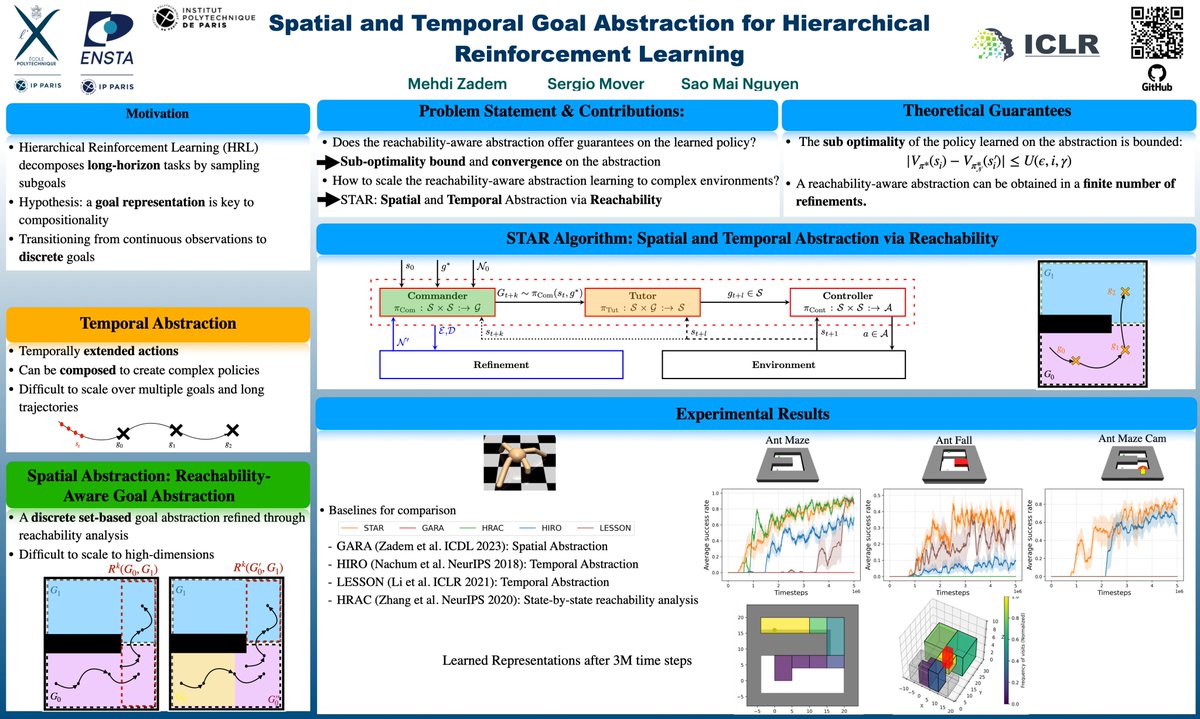

A symbolic representation of tasks is key for compositionality. Our goal-conditioned hierarchical #ReinforcementLearning algorithm STAR learns a discrete representation of continuous sensorimotor space online #ReachabilityAnalysis #ContinualLearning #ICLR2024

👉openreview.net/pdf?id=odY3PkI…

👌[Download 541-page PDF eBook] Understanding #DeepLearning : udlbook.github.io/udlbook/ by Simon Prince👈

V/ Kirk Borne

#AI #artificialintelligence #genai #BigData #DataScience #LLM #ML #MachineLearning #NeuralNetworks #ReinforcementLearning #NLProc #ComputerVision #Algorithms …

![Dr. Debashis Dutta (@debashis_dutta) on Twitter photo 2024-03-09 17:47:42](https://pbs.twimg.com/media/GIPvHixWIAASBwh.jpg)