The year is #2016. #elonmusk, #samaltman, #gregbrockman, #ilyasutskever, #johnschulman, and #wojciechzaremba lay the groundwork for ChatGPT and found #openai.

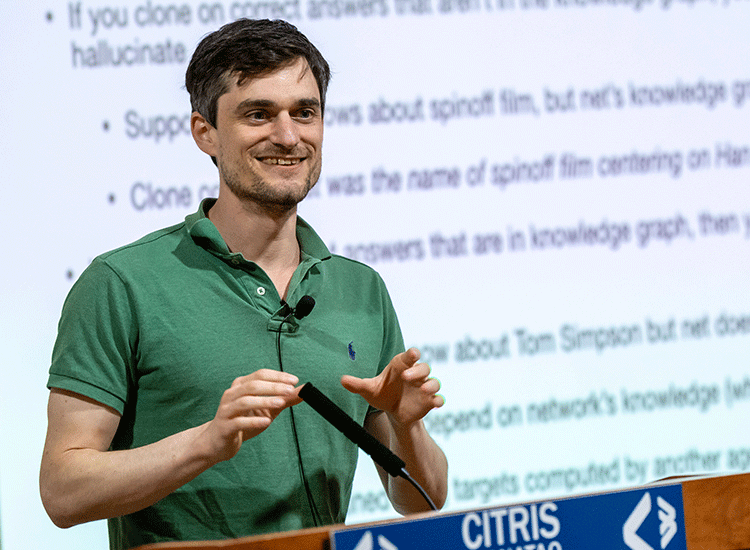

John Schulman (OpenAI Cofounder) - Future challenges and opportunities in AI development

youtube.com/watch?v=Wo95ob…

#ai #artificialintelligence #futureofai #johnschulman

@ChatGPTNFTs Elon Musk, #SamAltman, #GregBrockman, #IlyaSutskever, #JohnSchulman, and #WojciechZaremba. I found this mistake.

Elon Musk Our thanks to all of these high-profile individuals:

@ElonMusk

@SamAltman

Greg Brockman

@llyaSutskever

@JohnSchulman

Wojciech Zaremba

2023 TL Human Studies Symposium: the third "unthinkable," the arrival of ChatGPT, and the inevitability of the "study of the soul"

By a strange twist of fate, ChatGPT came out of the lab of the professor who taught me in the Berkeley AI course I completed the year before last.

buyan77.com/blog/2023/10/t… #ChatGPT #PieterAbbeel #JohnSchulman

Berkeley Alumnus #JohnSchulman to Lead Series on Artificial Intelligence #AI #BerkeleyNews

news.berkeley.edu/2023/03/10/ai-…

Will #GoogleBard and #ChatGPT likely draw on #WikipediaWikidata (which WorldUnivandSch has been in since 2015) in 300 #WUaSlanguages (with 7,151 living languages planned), Sandhini Agarwal, #LiamFedus, #JanLeike, #JohnSchulman, Denny Vrandečić, #PeterNorvig? #GoogleWUaSAI

Percy Liang Aidan Clark from our recently released evals repo github.com/openai/simple-…

Ethan Mollick We'll post some release notes in a day or two. We were just a bit uncoordinated about getting everything ready at once, and we didn't want to further delay getting the new model out to developers.

David Krueger That's inconsistent with my recollection of Greg's views, and it doesn't sound like something Greg would say even if he did disagree with other people on the team.

Michael Nielsen A relevant idea from Vitalik: that coordination can be good and bad, so as a mechanism designer, you want to control what sizes of groups are able to coordinate/collude vitalik.eth.limo/general/2020/0…

Nick Dobos Currently we don't show max_tokens to the model, but we plan to (as described in the model spec). We do think that laziness is partly caused by the model being afraid to run out of tokens, as it gets penalized for that during training.

The wondrous world of ChatGPT

Will it spell the end of Google? #ChatGPT #OpenAI #Google #SamAltman #ElonMusk #GregBrockman #IlyaSutskever #JohnSchulman mediaoneonline.com/mediaone-shelf…