onnxruntime

@onnxruntime

Cross-platform training and inferencing accelerator for machine learning models.

ID:1041831598415523841

http://onnxruntime.ai 17-09-2018 23:29:44

291 Tweets

1.3K Followers

43 Following

Run PyTorch models in the browser, on mobile and desktop, with #onnxruntime, in your language and development environment of choice 🚀 onnxruntime.ai/blogs/pytorch-…

#ONNX Runtime saved the day with our interoperability and ability to run locally on-client and/or cloud! Our lightweight solution gave them the performance they needed with quantization & configuration tooling. Learn how they achieved this in this blog!

cloudblogs.microsoft.com/opensource/202…

Join us live TODAY! We will be talking to Akhila Vidiyala and Devang Aggarwal on AI Show with Cassie! We will show how developers can use #huggingface #optimum #Intel to quantize models and then use #OpenVINO for #ONNXRuntime to accelerate performance.

👇

aka.ms/aishowlive

Imagine the frustration of, after applying optimization tricks, finding that the data copying to GPU slows down your 'MUST-BE-FAST' inference...🥵

🤗 Optimum v1.5.0 added onnxruntime IOBinding support to reduce your memory footprint.

👀 github.com/huggingface/op…

More ⬇️

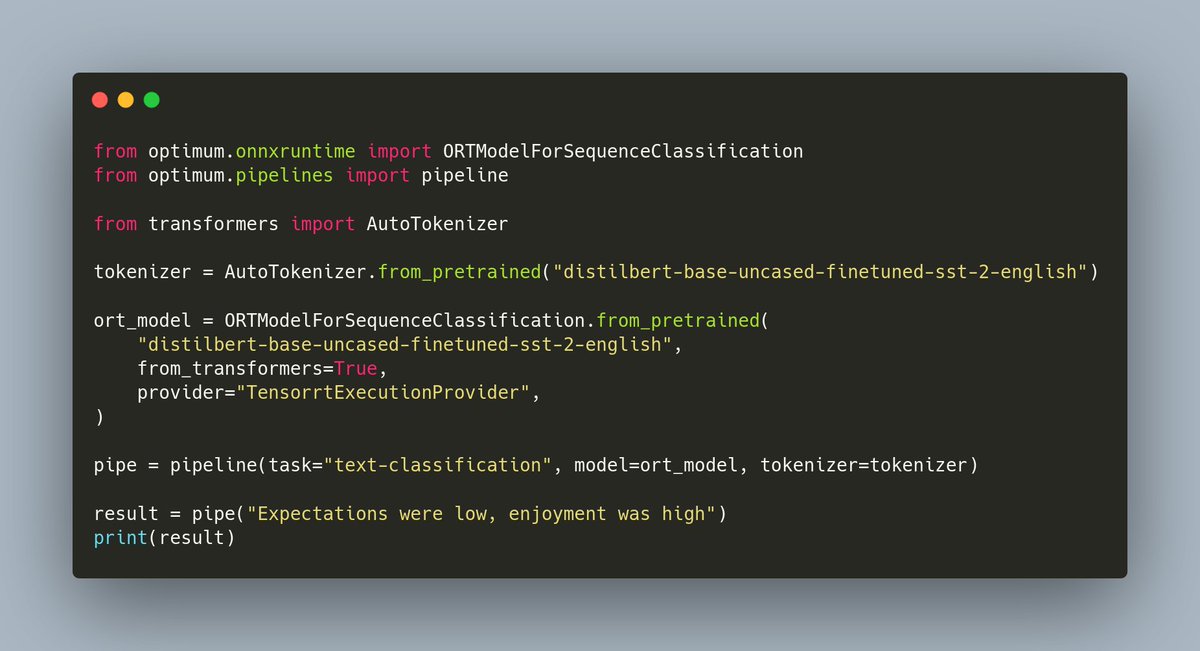

Want to use TensorRT as your inference engine for its speedups on GPU but don't want to go into the compilation hassle? We've got you covered with 🤗 Optimum! With one line, leverage TensorRT through onnxruntime! Check out more at hf.co/docs/optimum/o…

📣The new version of #ONNXRuntime v1.13.0 was just released!!!

Check out the release notes and the video from the engineering team to learn more about what is in this release!

📝github.com/microsoft/onnx…

📽️youtu.be/vo9vlR-TRK4

Finally tokenization with Sentence Piece BPE now works as expected in #NodeJS #JavaScript with tokenizers library 🚀! Now getting 'invalid expand shape' errors when passing text tokens' encoded ids to the MiniLM onnxruntime converted Microsoft Research model huggingface.co/microsoft/Mult…

🏭 The hardware optimization floodgates are open!🔥

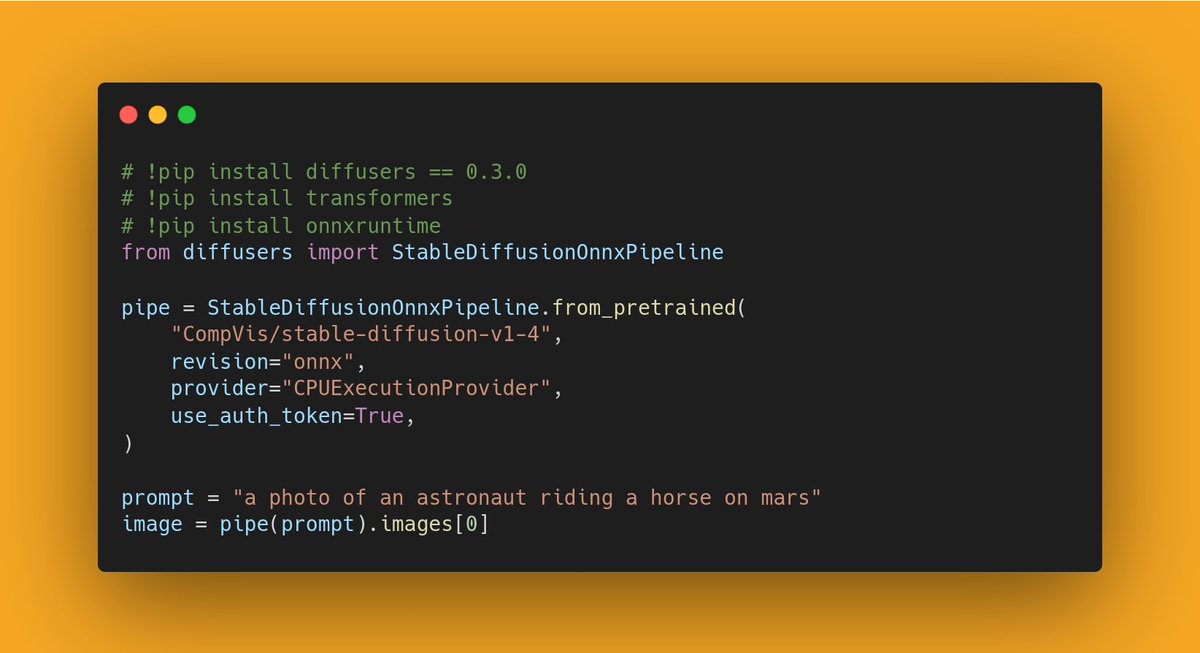

Diffusers 0.3.0 supports an experimental ONNX exporter and pipeline for Stable Diffusion 🎨

To find out how to export your own checkpoint and run it with onnxruntime, check the release notes:

github.com/huggingface/di…

💡Senior Research & Development Engineer at Deltatre, @tinux80 is also a #MicrosoftMVP and Intel Software Innovator.

📊Don't miss his talk on #AzureML and #Onnx Runtime at #WPC2022!

👉Buy your ticket: wpc2022.eventbrite.it

Microsoft Italia

Gerald Versluis What about a video on ONNX runtime?

Here is the official documentation devblogs.microsoft.com/xamarin/machin…

And MAUI example:

github.com/microsoft/onnx…

The natural language processing library Apache OpenNLP is now integrated with ONNX Runtime! Get the details and a tutorial explaining its use on the blog: msft.it/6013jfemt #OpenSource

In this article, a community member used #ONNXRuntime to run the GPT-2 model from Ruby and generate English sentences:

dev.to/kojix2/text-ge…

Come join us for the hands-on lab (September 28, 1-3pm) to learn about accelerating your ML models via ONNX Runtime frameworks on Intel CPUs and GPUs. Some surprise goodies as well! #IntelON #iamintel #intelarc Intel Graphics Intel Software Lisa Pearce

intel.com/content/www/us…