John 👁💚🔮⚕⚡☀️♻️🌳🔸

@johnhanacek

#XR Interaction Designer @nanome_inc ◇ He/Him/They/Xyr ◇ Writer #RegenerativeFutures Artist ◇ Infinite Player ◇ @GlobalCoLabNet @Avatar_MEDIC @CanCoverIt

ID:979389758

https://jhanacek.net 29-11-2012 23:48:40

11.6K Tweets

1.7K Followers

5.0K Following

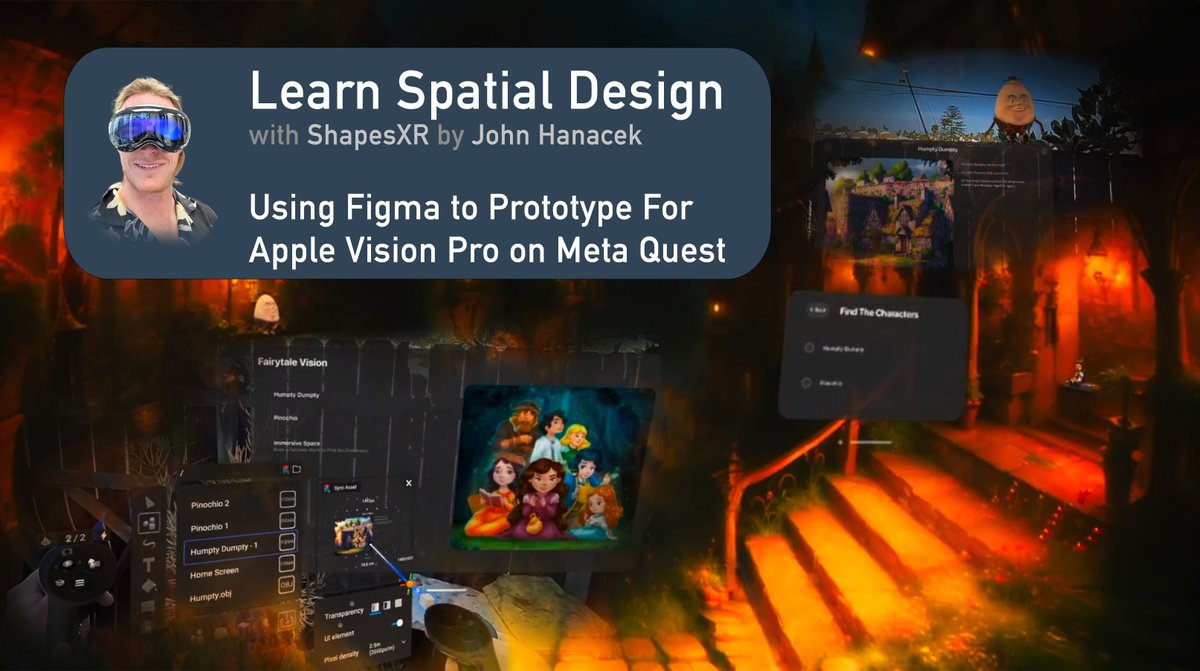

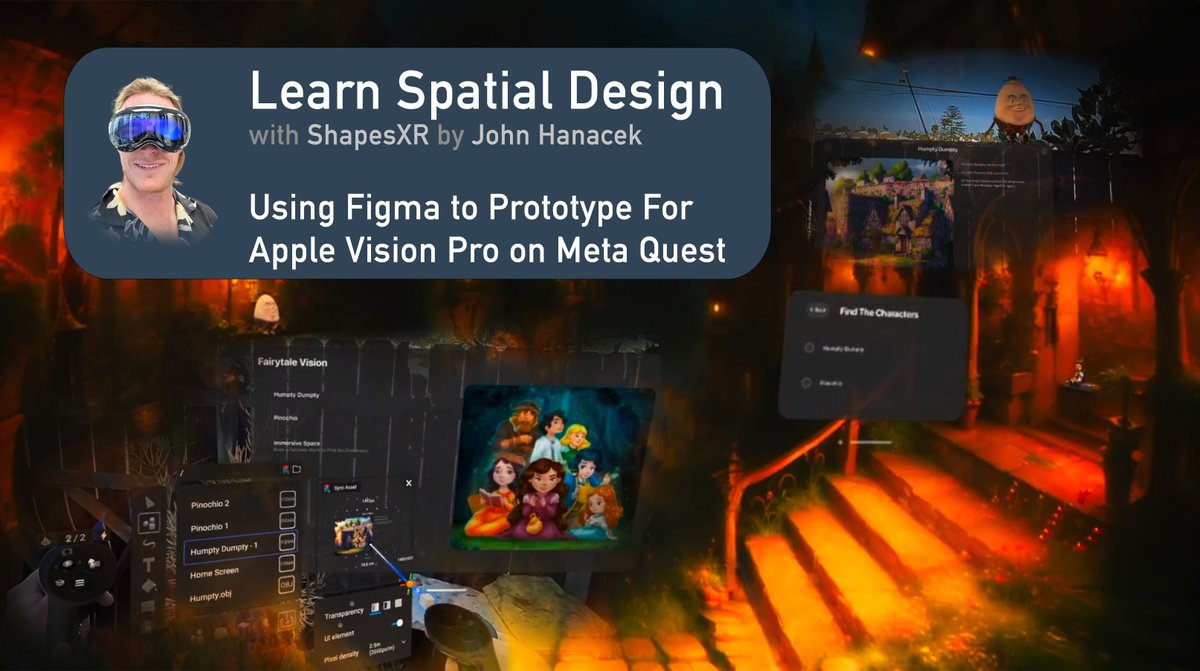

Learn how to #design #spatialcomputing apps and games directly on your Quest with John 👁💚🔮⚕⚡☀️♻️🌳🔸 👉 medium.com/John 👁💚🔮⚕⚡☀️♻️🌳🔸/l…

In the article, he goes through how to import #UI from #Figma, prototype volumes and even full spaces on #VisionPro.

Check it out, you are going to love it!

Do not miss this comprehensive walkthrough from John 👁💚🔮⚕⚡☀️♻️🌳🔸 on how to #design for #VisionOS without a #VisionPro 😉.

Learn how to import your #UI, and prototype windows, volumes and fully immersive spaces directly on your #Quest

medium.com/John 👁💚🔮⚕⚡☀️♻️🌳🔸/l…

Fun video from my experience seeing the #TotalSolarEclipse in Dallas, featuring tiny hand-made music and a digital painting. It was such a glorious experience! youtu.be/4iotWsaL9vA?si…

You can design #AppleVisionPro apps using ShapesXR & Figma on #MetaQuest devices! Check out how and get started for free yourself: open.substack.com/pub/spatialand…
