Lewis Tunstall

@_lewtun

🤗 LLM engineering & research @huggingface

📖 Co-author of "NLP with Transformers" book

💥 Ex-particle physicist

🤘 Occasional guitarist

🇦🇺 in 🇨🇭

ID:1029493180704714753

https://transformersbook.com/

Joined 14-08-2018 22:21:16

3.2K Tweets

9.4K Followers

424 Following

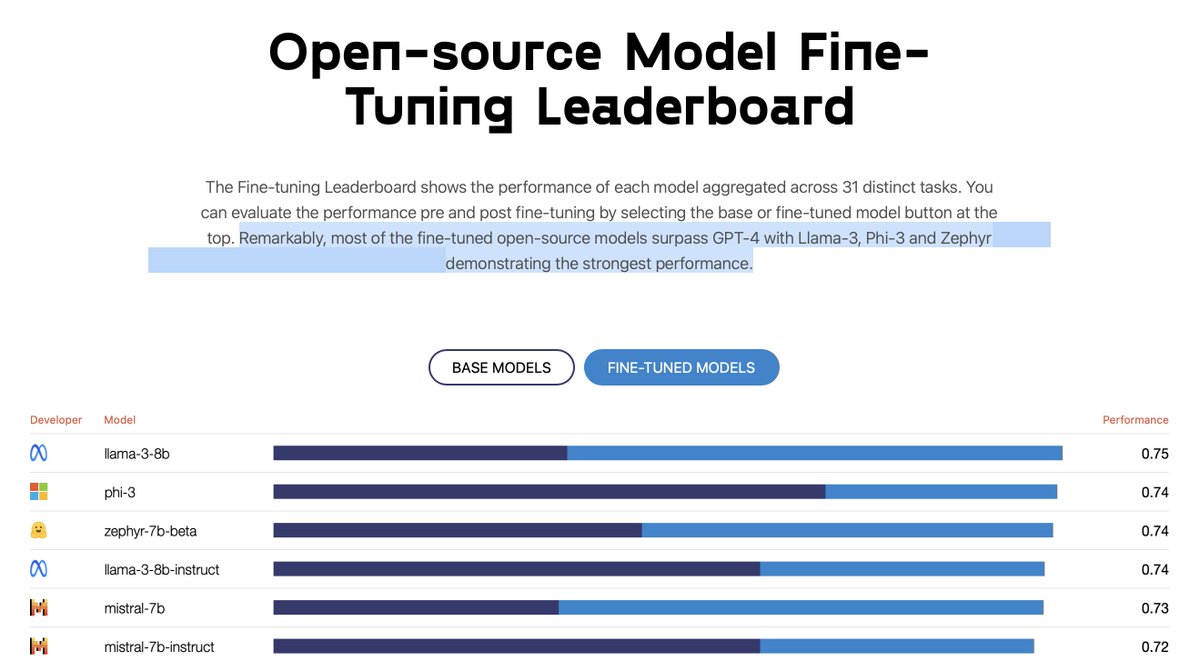

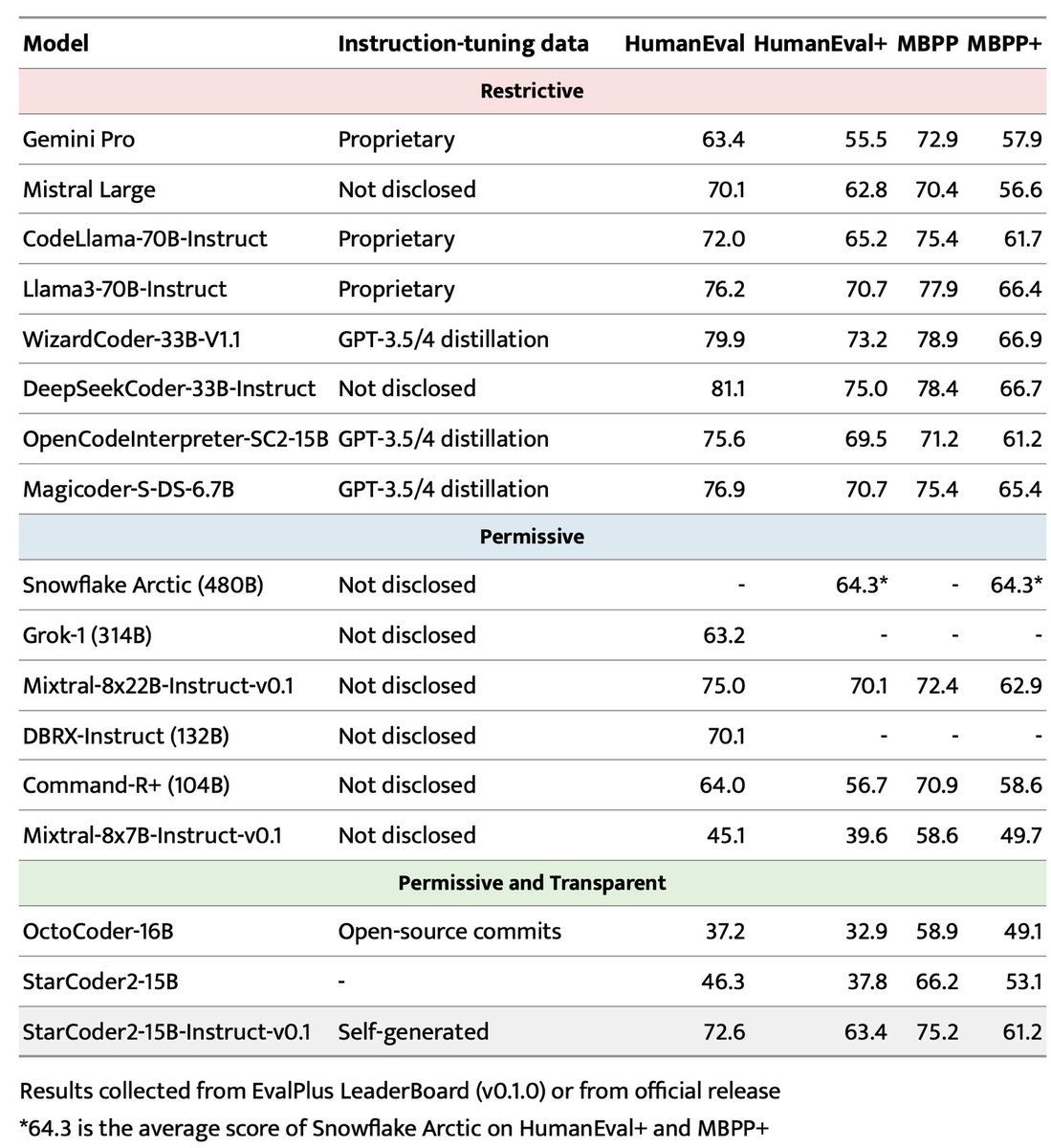

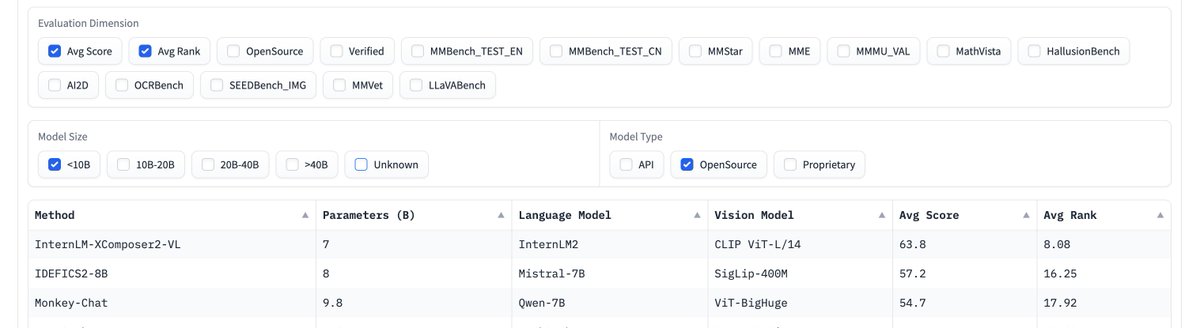

The Open LLM Leaderboard just crossed 10k likes, making it the second most popular Space on the Hub 🔥!

What started as an internal project by Edward Beeching has grown into a large-scale evaluation effort thanks to Clémentine Fourrier 🍊, Nathan, and the whole open source…

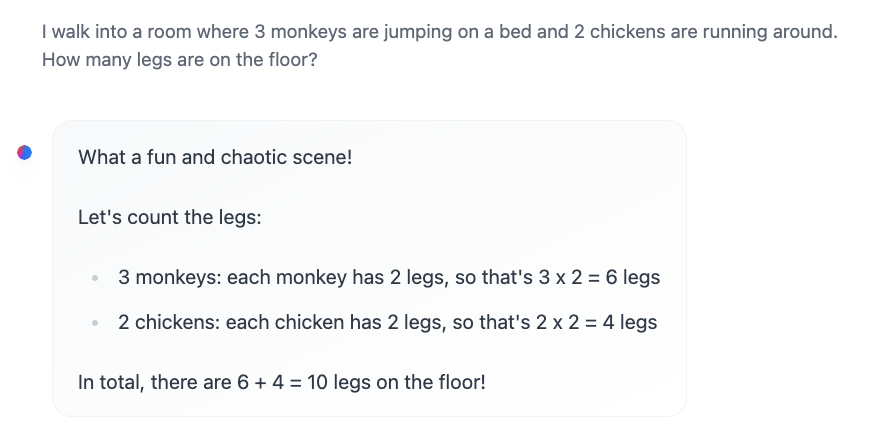

Looks like Cohere For AI provided a timely answer to my question :)

x.com/PSH_Lewis/stat…

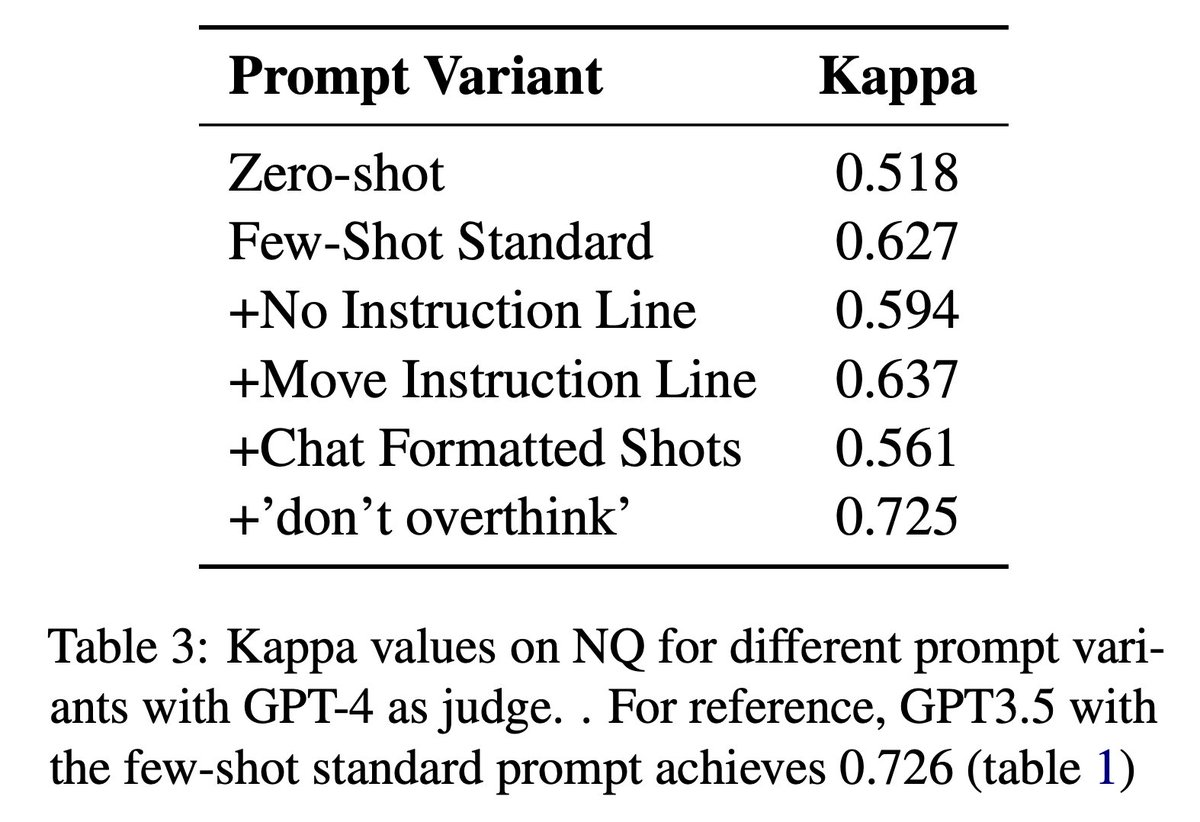

Lewis Tunstall, lmsys.org: Patrick Lewis, sophiaalthammer, Ola Piktus, and others published a paper on arXiv today that advocates using an ensemble of judges (a panel of LLMs; PoLL) instead of a single judge model. Their evaluation includes an ablation comparing different prompt variants.

arxiv.org/abs/2404.18796
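The panel-of-judges idea can be illustrated with a short Python sketch. It is an assumption-laden illustration, not the paper's code: the judge outputs are hard-coded placeholder values, and `majority_vote` and `average_pool` are hypothetical helper names for two simple ways of combining a panel's verdicts into one score.

```python
from collections import Counter
from statistics import mean


def majority_vote(verdicts: list[bool]) -> bool:
    """Return True only if more than half of the judges marked the answer correct.

    Ties (possible with an even number of judges) count as incorrect.
    """
    counts = Counter(verdicts)
    return counts[True] > counts[False]


def average_pool(scores: list[float]) -> float:
    """Average the numeric ratings assigned by each judge."""
    return mean(scores)


# Hypothetical outputs from three different judge models on a single answer.
panel_verdicts = [True, False, True]
panel_scores = [7.0, 6.0, 8.0]

print(majority_vote(panel_verdicts))  # True
print(average_pool(panel_scores))     # 7.0
```

Using several smaller, diverse judges means no single model's biases decide the outcome; the aggregation rule (voting for binary correctness, averaging for graded scores) is a design choice that depends on the task.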

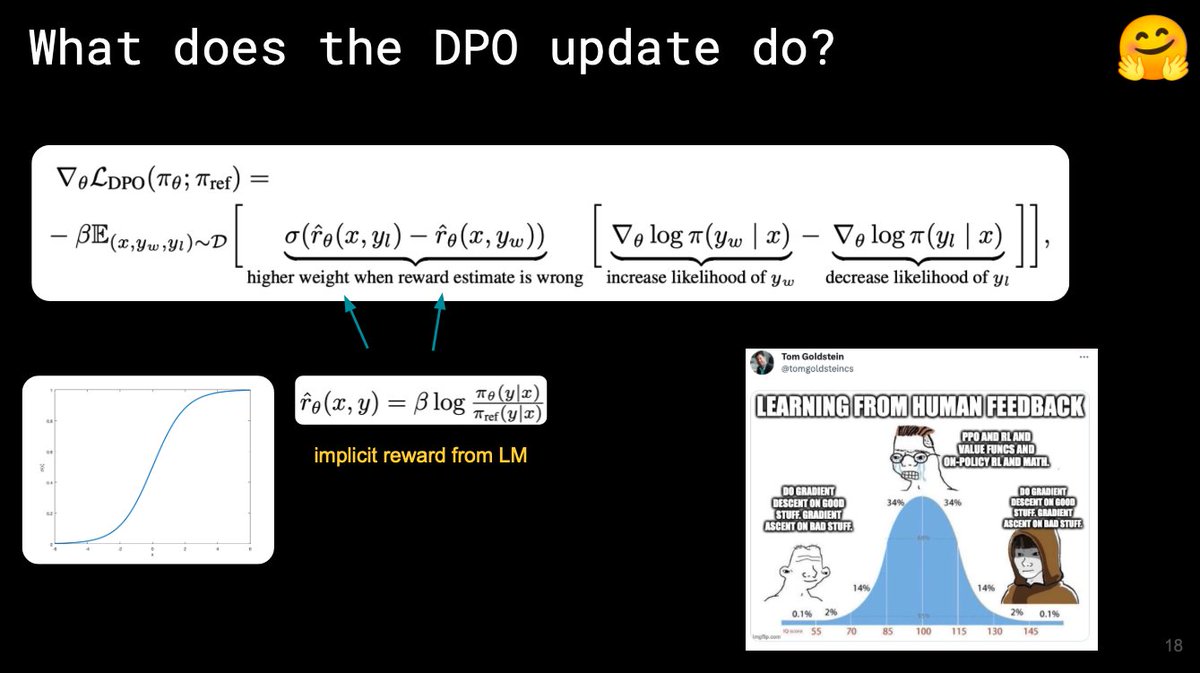

The best part about making slides on LLM alignment is that I now get to combine my two passions in life: math and memes 😅

(this one is a classic from Tom Goldstein)