Min-Hung (Steve) Chen

@CMHungSteven

Senior Research Scientist @NVIDIAAI @NVIDIA | Ex-@Microsoft Azure AI, @MediaTek AI | Ph.D. @GeorgiaTech | Multimodal AI/CV/DL/ML | https://t.co/dKaEzVoTfZ

ID:329683616

https://minhungchen.netlify.app/ 05-07-2011 13:26:04

594 Tweets

1.7K Followers

1.2K Following

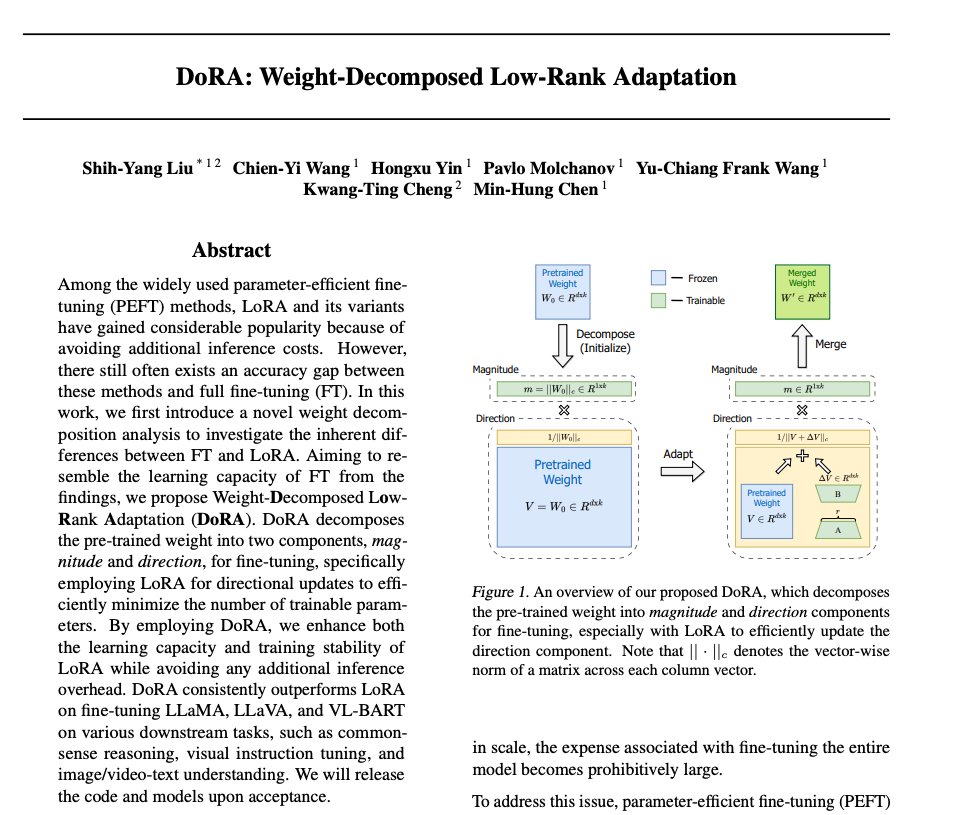

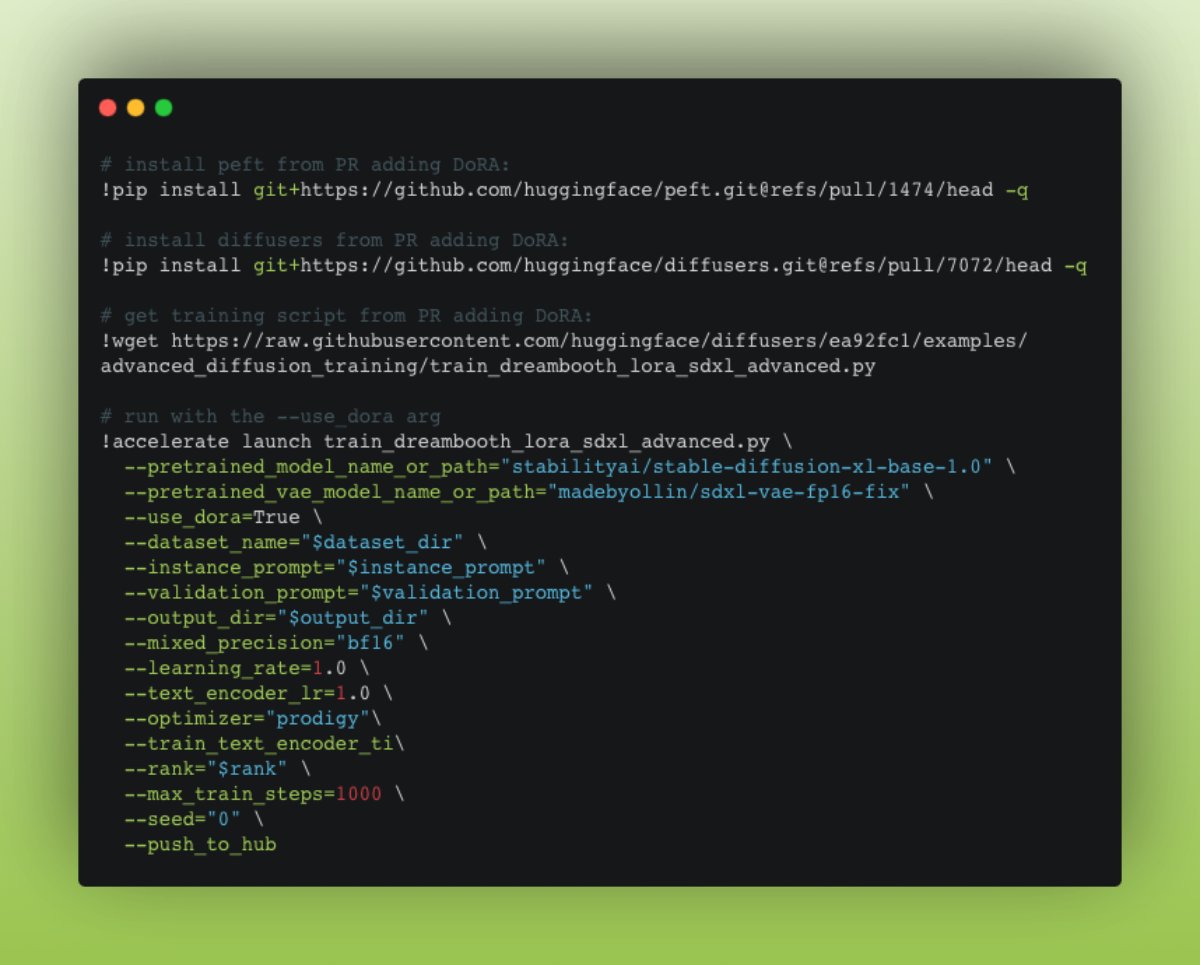

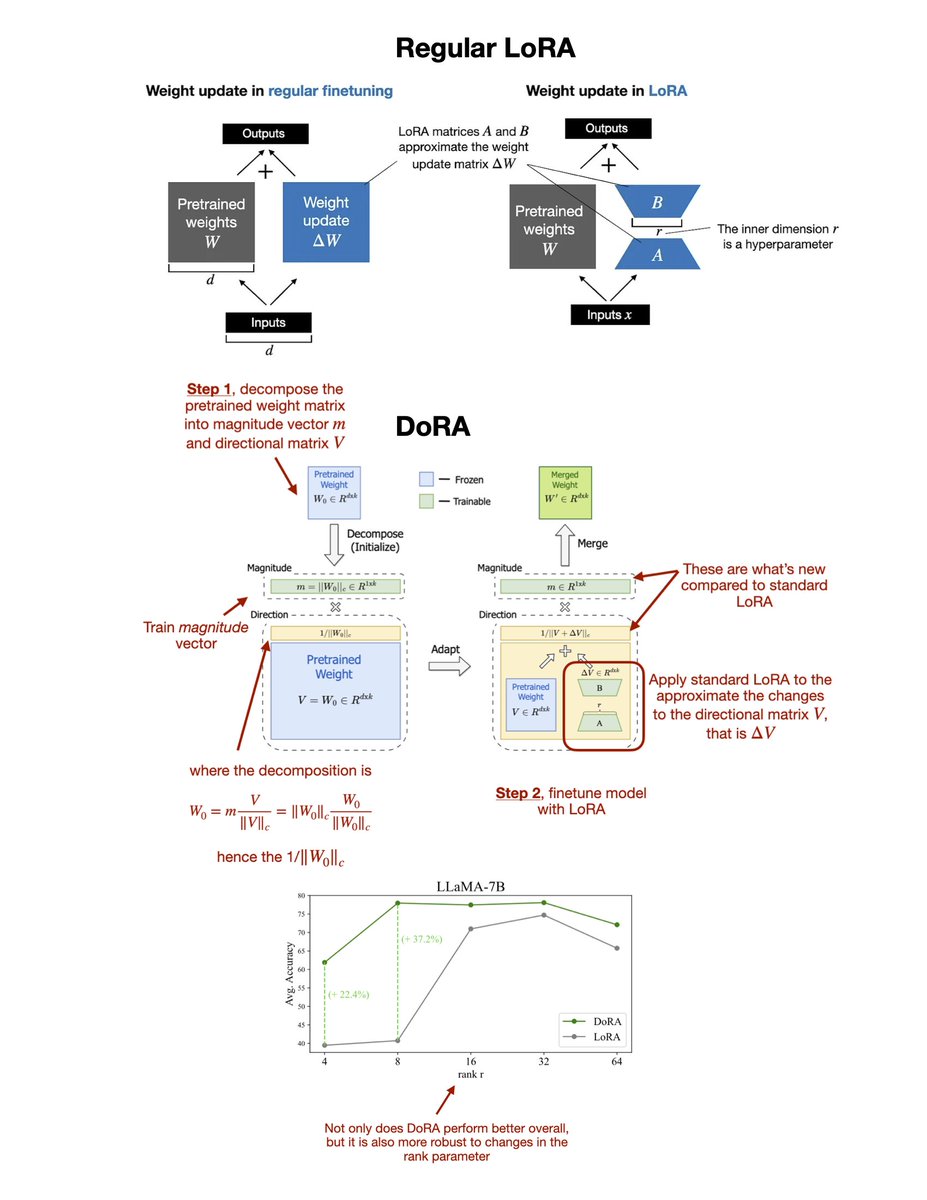

🚀 Time to upgrade your LoRA for free! Introducing DoRA #ICML2024! It outperforms LoRA across various models, such as LLaMA and LLaVA. Give it a quick try: github.com/NVlabs/DoRA

Thanks to Sebastian Raschka for sharing! 🙌

#AI #MachineLearning #NVIDIAAI #PEFT ICML Conference

Thanks Leshem Choshen for sharing our DoRA (ICML Conference), which could become the default replacement for LoRA!!

[TL;DR] DoRA outperforms LoRA with various backbones (e.g., LLaMA 1, 2, 3 and LLaVA).

[Code] github.com/NVlabs/DoRA

Stay tuned for more details!

#ICML2024 #icml #peft #lora #AI

![Min-Hung (Steve) Chen (@CMHungSteven) on Twitter photo 2024-05-03 01:11:45](https://pbs.twimg.com/media/GMncrd3awAAQBHp.jpg)

[ #ICML2024 ]

Not satisfied with existing PEFT methods?

Try our DoRA (ICML Conference), which outperforms LoRA with various backbones (e.g., LLaMA 1, 2, 3 and LLaVA).

[Code] github.com/NVlabs/DoRA

Stay tuned for more details!

NVIDIA AI #icml #peft #lora #AI

Thanks Sebastian Raschka for sharing!

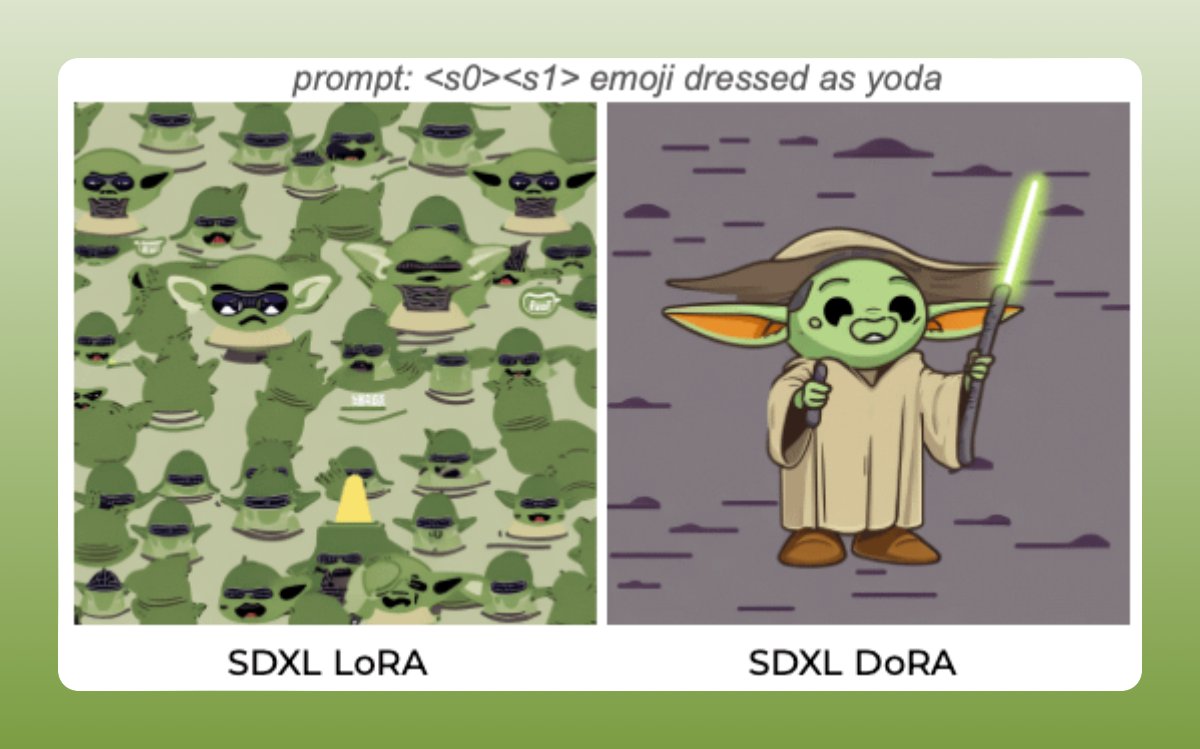

DoRA explores the magnitude and direction of the weights and surpasses LoRA quite significantly.

This is done with an empirical finding that I can't wrap my head around

NVIDIA AI

arxiv.org/abs/2402.09353

Shih-Yang Sean Liu, Chien-Yi Wang, Hongxu (Danny) Yin, Pavlo Molchanov, Min-Hung (Steve) Chen
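The post above says DoRA works with the magnitude and direction of the weights. The sketch below illustrates that split. It assumes the weight-decomposition formulation from arxiv.org/abs/2402.09353, where the adapted weight is W' = m · (W0 + BA) / ||W0 + BA||_c. In this formula, ||·||_c is the L2 norm of each column, BA is the LoRA low-rank update applied to the direction, and m is a per-column magnitude vector. The sketch only computes the merged weight and does no training. The helper names (`matmul`, `column_norms`, `dora_weight`) are hypothetical, and nested lists stand in for tensors. It is not the NVlabs/DoRA code.

```python
import math

# Hypothetical helpers for this sketch; nested lists stand in for tensors.
def matmul(X, Y):
    """Multiply an n x r matrix X by an r x k matrix Y."""
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def column_norms(W):
    """L2 norm of each column of W (the column-wise norm ||.||_c)."""
    return [math.sqrt(sum(row[j] ** 2 for row in W)) for j in range(len(W[0]))]

def dora_weight(W0, B, A, m):
    """Return m * (W0 + B A) / ||W0 + B A||_c, scaling column j to length m[j]."""
    BA = matmul(B, A)  # low-rank (LoRA-style) update to the direction
    V = [[w + d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W0, BA)]
    norms = column_norms(V)  # normalize each column to unit length
    return [[m[j] * row[j] / norms[j] for j in range(len(row))] for row in V]

# Assumed initialization: m = ||W0||_c and B = 0, so W' starts equal to W0.
W0 = [[3.0, 0.0], [4.0, 2.0]]
m = column_norms(W0)  # [5.0, 2.0]
B = [[0.0], [0.0]]    # d x r with rank r = 1
A = [[1.0, 1.0]]      # r x k
print(dora_weight(W0, B, A, m))  # [[3.0, 0.0], [4.0, 2.0]]
```

Because each column is renormalized, a nonzero B·A only changes the direction of each column. The column lengths always equal m, so in this formulation magnitude and direction can be updated separately.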