Tianle Cai

@tianle_cai

ML PhD @Princeton. Life-long learner, hacker, and builder. Tech consultant & angel investor. Prev @togethercompute @GoogleDeepMind @MSFTResearch @citsecurities.

ID:3022633752

https://tianle.website 16-02-2015 15:32:35

385 Tweets

5.2K Followers

3.8K Following

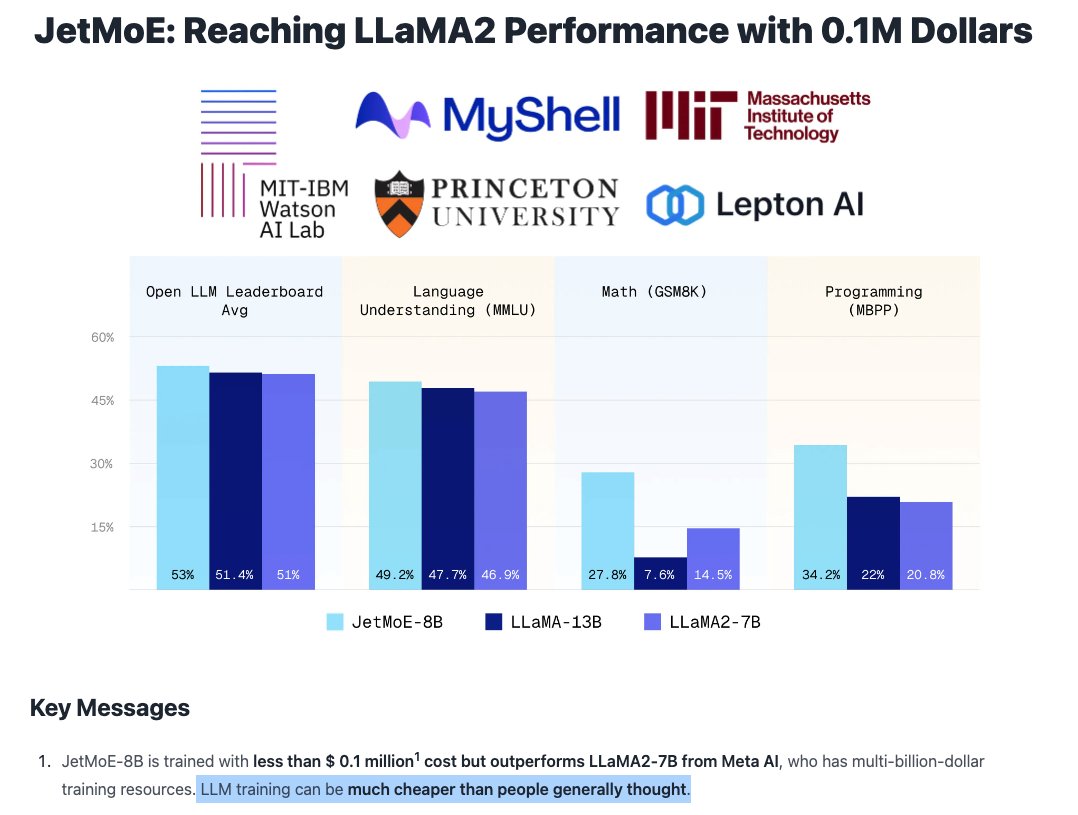

Aran Komatsuzaki I mostly agree with this post, except for the part saying that ModuleFormer leads to no gain, or to performance degradation and instability. Could you point me to these results? Our version of MoA made two efforts to solve the stability issue: 1) use dropless MoE, 2) share the KV projection…