infraX | $INFRA: Alright, let's dive into the fascinating features of infraX | $INFRA!

5️⃣ 𝗙𝗘𝗔𝗧𝗨𝗥𝗘𝗦 𝗢𝗙 𝗜𝗻𝗳𝗿𝗮𝗫:

- GPU Lending

- GPU Rental

- AI Node Rental

- Pay-as-you-go Rental

- Custom VM

- Staking

- H100 access

Let's take a closer look at how they function…

Revolutionize your network management with AmpCon™! Effortlessly deploy and automate PicOS® switches remotely, while seamlessly managing your #InfiniBand H100 networks through a unified platform.

Learn More: bit.ly/4bFoAPa

#Networking #Tech #DataCenter

🎶Fine-tuning on Wikipedia Datasets🎶

I extract a dataset from Wikipedia to fine-tune Llama 3 on the Irish language.

Thanks to Daniel Han and Unsloth AI - I was able to fine-tune Llama 3 and get through 50k+ rows of data in just over an hour on an H100.
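For a rough sense of scale, the figures above can be turned into an average throughput. This is only a back-of-the-envelope sketch: it assumes exactly 50,000 rows and exactly one hour (the post says "50k+" and "just over an hour"), and the 200k-row dataset is a hypothetical example, not something from the post.

```python
def rows_per_second(rows: int, seconds: float) -> float:
    """Average number of dataset rows processed per second."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return rows / seconds


def estimated_hours(rows: int, rate: float) -> float:
    """Hours needed to process `rows` at `rate` rows per second."""
    if rate <= 0:
        raise ValueError("rate must be positive")
    return rows / rate / 3600


# Rounded figures from the post: about 50k rows in about one hour (assumed exact here).
rate = rows_per_second(50_000, 3600)
print(f"{rate:.1f} rows/s")

# Hypothetical larger dataset, assuming the same average rate holds.
print(f"{estimated_hours(200_000, rate):.1f} h for 200k rows")
```

Real throughput depends on sequence length, batch size, and the fine-tuning setup, so treat the result as an order-of-magnitude estimate.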

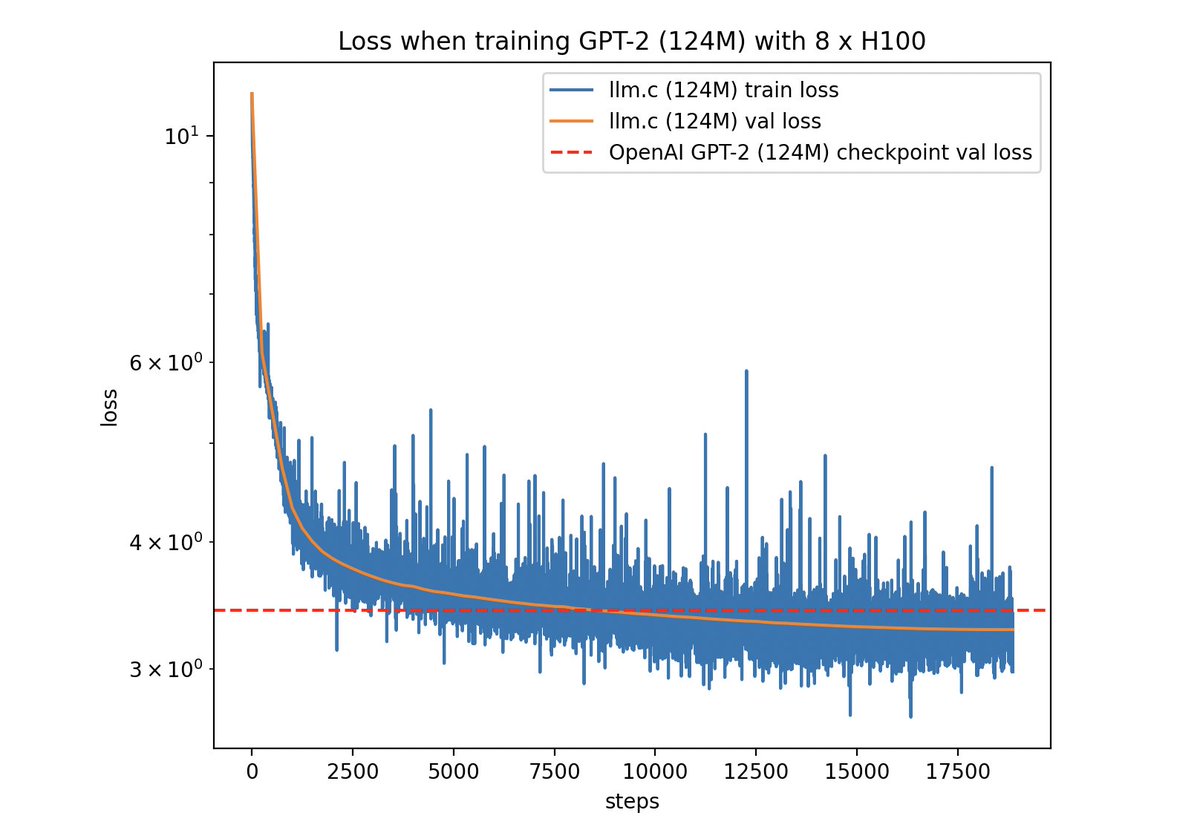

I trained GPT-2 (124M) using Andrej Karpathy's llm.c in just 43 minutes with 8 x H100 GPUs.

This is 2.1x faster than the 90 minutes it took with 8 x A100 GPUs. Currently, the cost of renting an H100 GPU is around $2.50/hr (under a 1-year commitment), which reduces the training cost for